Storage is a core IT infrastructure component found in both on-prem and cloud datacenters. In this post we will learn the fundamental concepts of storage infrastructure. We will explore common storage devices and systems, and how they are attached and accessed in both cloud and local datacenters.

In the previous posts we learned the fundamental concepts of Cloud Computing and Virtualization. In this post we will cover the topics below about storage infrastructure, a core component in Cloud Computing.

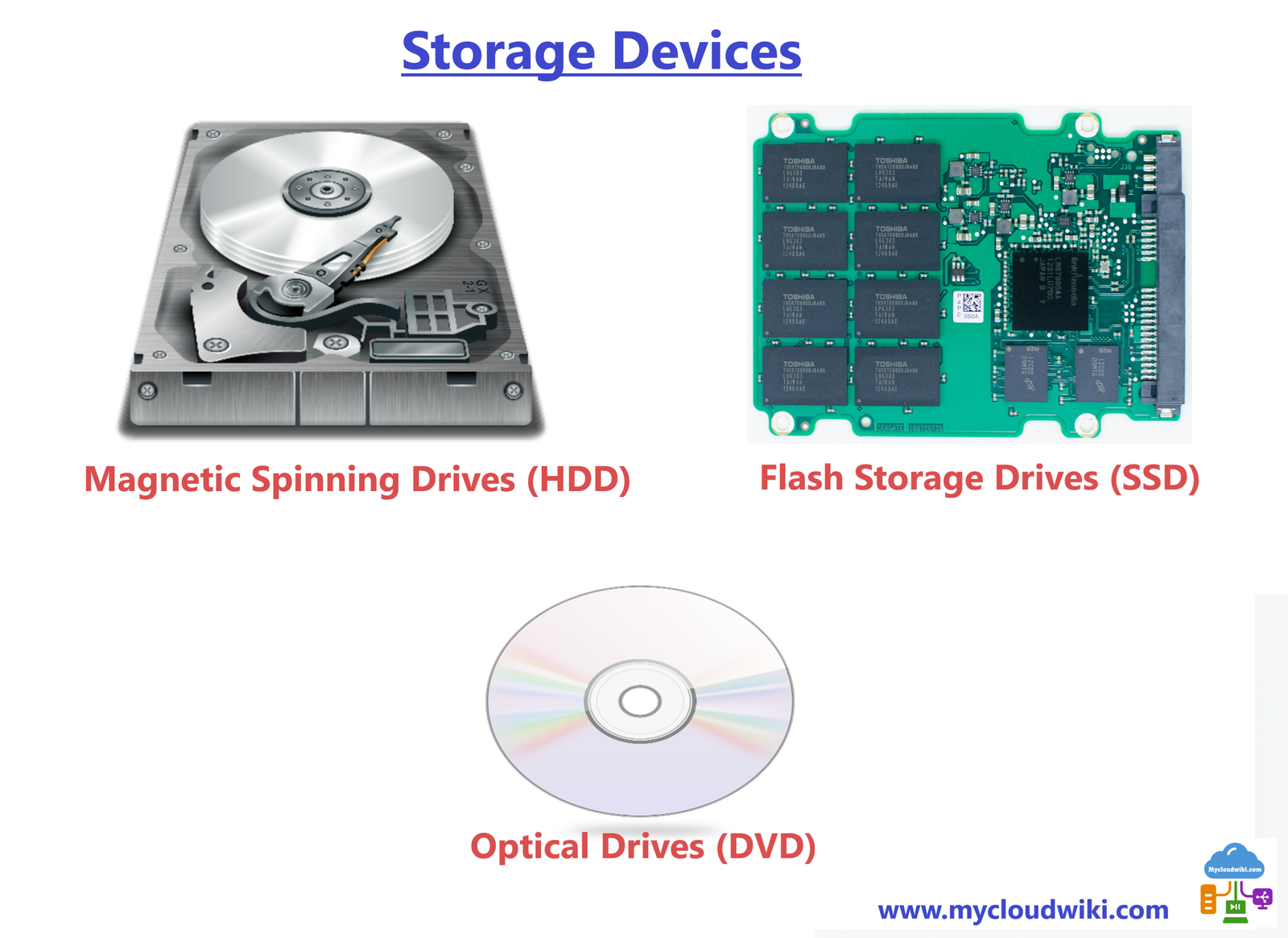

What is a Storage Device

A storage device is a non-volatile recording medium on which information can be persistently stored. Storage may be on an internal hard drive, removable memory cards, or an external magnetic tape drive connected to a compute system. Based on the nature of the storage media used, storage devices can be broadly classified as:

- Magnetic Spinning Drives – Hard disk drive (HDD) and magnetic tape drive.

- Optical storage Drives – Blu-ray, DVD, and CD.

- Flash-based Drives – Solid state drive (SSD), memory card, and USB thumb drive (or pen drive).

Magnetic Spinning Drives

- These are the traditional hard drives, which consist of a series of rapidly spinning platters coated with a magnetic material. HDDs and tape drives are the common examples of magnetic storage devices, although tape records data on a reel of magnetic tape rather than on platters.

- A read/write head moves along the platters for storing and retrieving bits of storage information on the magnetic media.

- Many different types of spinning drive designs have evolved with various physical sizes, and storage densities. However, HDD is the most commonly and widely used magnetic storage medium in both on-prem and Cloud Computing world.

- An HDD is cabled to the computer system through a standardized interface that allows for interoperability and ease of use as well as performance.

Optical Storage Devices

- Most optical media are flat, circular discs made of polycarbonate, with one surface carrying a special reflective coating such as aluminum.

- Optical drives use laser technology to record data on the disc in the form of microscopic light and dark dots.

- The laser reads the dots and generates electrical signals representing the data. The commonly used optical disc types are CD, DVD, and Blu-ray Disc (BD). These discs can be recordable or re-writable.

- Recordable discs have Write Once, Read Many (WORM) capability, while read-only memory (ROM) discs are written at manufacture. Both are typically used as a distribution medium for applications or as a means to transfer small amounts of data from one system to another.

Flash Storage Drives

- Solid state drives (SSDs), memory cards, and USB drives are examples of flash drives.

- Unlike the spinning platters of traditional HDDs, SSDs store data in silicon-based flash memory and have no moving parts.

- Also, SSD drives can have very fast read and write times, which can increase server performance in cloud computing datacenters.

Types of Storage Interfaces

Storage devices use various types of interface technologies to communicate with servers. Over the years, multiple interface types have been designed to improve performance and data access speed based on the data access patterns from the storage devices. Below are some of the commonly used storage interfaces.

Small Computer Systems Interface (SCSI)

- SCSI is a parallel interface technology found in PCs and server systems. It has been in use for decades and is still popular today.

- Many types of SCSI interfaces have evolved over the years, varying in bus width and data transfer speed. SCSI is a data bus that allows multiple devices to be connected on the same cable.

- A serial version known as Serial Attached SCSI (SAS) allows SCSI commands to be sent over a serial interface.

- SAS is a direct replacement for the SCSI parallel cable and offers much higher transfer rates.

Advanced Technology Attachment (ATA)

- ATA is a standardized interface for storage to system interconnections and it is commonly found on storage devices that are directly connected to a server or computer.

- ATA drives integrate the controller into the drive itself, which is why the interface is also called Integrated Drive Electronics (IDE).

- Commonly used drive designs with ATA/IDE interfaces are Hard Disk drives, CD-ROM, DVD, and tape drives.

Serial Advanced Technology Attachment (SATA)

- SATA is a current technology found in many production datacenters.

- It is a serial link connection to the drive instead of the older parallel interface found in SCSI and IDE.

Fibre Channel Interface (FC)

- This is another common type of interface found in today's datacenters supporting cloud applications and large enterprises.

- Fibre Channel is a SAN protocol that encapsulates the SCSI command set and allows for cloud servers to access storage resources remotely.

Disk Performance and Access Speeds

- Disk performance and access speed are important factors that differentiate the storage devices and interfaces described above. Performance is measured as the speed at which data is transferred between the storage device and the device initiating the storage request.

- Many factors come together to affect the performance of a disk system. The time it takes to find the data on the disk, known as the seek time, is an important metric.

- The actual speed of the bus also needs to be taken into consideration, and the disk rotation speed has a significant effect on the transfer rate of the data as well.

- The type of redundancy techniques that are implemented can play a significant role in disk performance.

- The read/write speed of the data to a storage device can also have a major impact on overall system performance.

- Workload also matters when measuring storage performance: some applications require high read performance, whereas others rely on fast writing of data to storage.
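
As a rough illustration of how seek time, rotation speed, and transfer rate combine, the sketch below estimates the average time to service one random I/O on a spinning disk. The function names are hypothetical, and the figures in the usage example (7,200 RPM, 8.5 ms average seek, 150 MB/s transfer, 4 KiB request) are illustrative assumptions, not measurements of any particular drive.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000.0 / rpm


def service_time_ms(seek_ms: float, rpm: float,
                    io_bytes: int, transfer_mb_per_s: float) -> float:
    """Estimated time for one random I/O: seek + rotation + data transfer."""
    transfer_ms = io_bytes / (transfer_mb_per_s * 1_000_000) * 1000.0
    return seek_ms + avg_rotational_latency_ms(rpm) + transfer_ms


def estimated_iops(service_ms: float) -> float:
    """Random I/O operations per second a single drive could sustain."""
    return 1000.0 / service_ms


if __name__ == "__main__":
    # Assumed example figures, not vendor specifications.
    t = service_time_ms(seek_ms=8.5, rpm=7200, io_bytes=4096,
                        transfer_mb_per_s=150)
    print(f"service time: {t:.2f} ms, roughly {estimated_iops(t):.0f} IOPS")
```

With these assumptions the seek and rotational delays dominate and the transfer itself is almost negligible for small requests, which is why faster-spinning disks and SSDs help random workloads so much.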

Introduction to Cloud Object Storage

Cloud object storage is commonly found in cloud storage services and offerings and is different from the common storage technologies such as file and block storage. This type of storage is commonly used in Big Data applications in cloud datacenters.

Data (such as a common file) is paired with metadata and combined into a storage object. Instead of the hierarchical structures found in traditional block and file storage systems, objects are stored in a flat namespace. Objects in the pool are retrieved by their object IDs.

Object ID

An object ID is a globally unique identifier that points to the stored piece of data. Object storage does not use block- or file-based storage techniques for locating the data. It uses the object ID to locate the data or metadata in the filesystem of the cloud storage arrays.

Metadata

Metadata is a part of a file or sector header in a storage system that is used to identify the content of the data. It is used in big data applications to index and search for data inside the file. Metadata can consist of many different types of information, such as the type of data or application and the security level. Object storage allows the administrators to define any type of information in metadata and associate it with a file.
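
To make the idea of object IDs and metadata more concrete, here is a minimal in-memory sketch of a flat object store. The class and method names (`ObjectStore`, `put`, `get`, `find`) are hypothetical and do not correspond to any real cloud provider's API; a real service would add persistence, policies, replicas, and access control.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    data: bytes
    metadata: dict = field(default_factory=dict)


class ObjectStore:
    """Toy object store: a flat namespace keyed only by object ID."""

    def __init__(self) -> None:
        self._objects: dict[str, StoredObject] = {}

    def put(self, data: bytes, **metadata) -> str:
        """Store data with its metadata and return a newly generated object ID."""
        object_id = uuid.uuid4().hex
        self._objects[object_id] = StoredObject(data, dict(metadata))
        return object_id

    def get(self, object_id: str) -> StoredObject:
        """Look an object up directly by ID; there is no directory path to walk."""
        return self._objects[object_id]

    def find(self, **criteria) -> list[str]:
        """Return IDs of objects whose metadata matches every given key/value."""
        return [oid for oid, obj in self._objects.items()
                if all(obj.metadata.get(k) == v for k, v in criteria.items())]
```

For example, `store.put(b"report", content_type="text/plain", security="internal")` returns an ID that can later be passed to `get`, while `find(security="internal")` searches by the administrator-defined metadata rather than by file name or location.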

Policies

In object storage, policies define the rights to perform operations such as archive, backup, security, creation, and deletion of storage objects.

Replicas

Replicas are exact copies of a stored item; they are used to place data closer to the points of access such as geographical preferences. When a replica is created, an exact clone of the original data is created in the storage arrays.

Access Control

Access controls define who can access the storage objects and what they are allowed to do. For example, a user or a user group can be assigned read-only, write-only, or read-and-write access to a file. Other types of access control rights to an object are delete and full control of the object.

Storage Management Techniques

Managing storage devices and storage systems is a critical task that is generally performed by a skilled storage administrator. The following storage management techniques should be understood in order to cost-efficiently utilize the available storage resources to meet the organization’s storage needs.

Storage Tiering

Certain applications may need high read or write speeds for better performance. Some data may need to be accessed very frequently, whereas other data can be stored and only rarely accessed by an application. Based on these performance and criticality requirements, different storage tiers can be defined, and data can be assigned to the tier that best meets its needs in the datacenter.

Tier 1 storage

- This tier is used for the most critical or frequently accessed data and is generally stored on the fastest, most redundant, or highest quality media available.

- Tier 1 storage is configured so that one or more disks can fail with no data being lost and access still available.

- Tier 1 storage arrays have the greatest manageability and monitoring capabilities and are designed to be the most reliable.

Tier 2 storage

- This storage tier is generally used for data that does not have fast read or write performance requirements or that is accessed infrequently.

- Tier 2 data can use less expensive storage devices and can even be accessed over a remote storage network.

- Some examples of Tier 2 data are email storage, file sharing, or web servers where performance is important but less expensive solutions can be used.

Tier 3 storage

- This storage tier is used for data that is often at rest and rarely accessed, or backups of Tier 1 and Tier 2 data.

- Examples of Tier 3 media are DVD, tape, or other less expensive media types.

There can be many different tiers created in a storage design in the cloud. More than three tiers can be implemented based on the complexity and the requirements of the storage system.

The lower the tier number, the more critical the data is considered to be and the more the design focuses on faster performance, redundancy, and availability.

Storage policies can also be established to meet the data requirements of the organization, thereby assigning data to be stored in the proper tier.

By properly classifying the data needs in a multi-tiered storage system, organizations can realize cost savings by not paying for more than what is required. This strategy is commonly used in cloud storage deployments and is one of the major advantages of using cloud.
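
A storage policy of this kind can be sketched as a simple classification rule. The example below is only an illustration under stated assumptions: the `DataSet` fields and the thresholds (1,000 reads per day for "hot" data, at least one read per day for "warm" data) are hypothetical values chosen for the example, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str
    reads_per_day: int
    business_critical: bool


def assign_tier(ds: DataSet, hot_threshold: int = 1000,
                warm_threshold: int = 1) -> int:
    """Return 1, 2, or 3 following the three-tier model described above."""
    # Critical or very frequently accessed data goes on the fastest media.
    if ds.business_critical or ds.reads_per_day >= hot_threshold:
        return 1
    # Regularly used data with modest performance needs.
    if ds.reads_per_day >= warm_threshold:
        return 2
    # Data at rest, archives, and backups.
    return 3


if __name__ == "__main__":
    for ds in (DataSet("orders-db", 50_000, True),
               DataSet("shared-files", 200, False),
               DataSet("2019-backups", 0, False)):
        print(ds.name, "-> Tier", assign_tier(ds))
```

In practice the criteria would come from the organization's own policy, and more than three tiers could be returned if the storage design calls for them.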

Storage Redundancy (RAID)

The term RAID has several definitions and is often referred to as a Redundant Array of Independent Disks. By combining physical disks, redundancy can be achieved without having to sacrifice performance.

Large storage volumes can be created by grouping the individual disks. When a storage logical unit spans multiple hard drives, increases in performance, speed, and volume size can be achieved.

Below are the different types of RAID levels which are commonly used in current IT data centers for various data requirements.

RAID 0

- RAID 0 uses striping, where the data to be stored is split into blocks and spread across two or more disks.

- A file is stored across more than one hard drive by breaking it into blocks of data and then striping the blocks across the disks in the system.

- Although RAID 0 is simple, it provides no redundancy or error detection, so if one of the drives in a RAID 0 array fails, all data is lost.

- However, since RAID 0 allows for parallel read and write operations, it is very fast and is often found in storage-intense environments such as many database applications where storage speed can often be a bottleneck.
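
The striping described above can be sketched in a few lines. This is a simplified model, assuming each "disk" is just a Python list of blocks and using a tiny 4-byte block size for readability; real controllers use much larger stripe units and work on raw sectors.

```python
def stripe(data: bytes, num_disks: int, block_size: int = 4) -> list[list[bytes]]:
    """Split data into blocks and distribute them round-robin across disks."""
    disks: list[list[bytes]] = [[] for _ in range(num_disks)]
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    for index, block in enumerate(blocks):
        disks[index % num_disks].append(block)
    return disks


def unstripe(disks: list[list[bytes]]) -> bytes:
    """Reassemble the data by reading one block from each disk in turn."""
    out = []
    longest = max(len(disk) for disk in disks)
    for row in range(longest):
        for disk in disks:
            if row < len(disk):
                out.append(disk[row])
    return b"".join(out)
```

Because consecutive blocks land on different disks, they can be read or written in parallel. The same layout also shows why losing any one disk leaves gaps throughout the data, which is the reason RAID 0 offers no redundancy.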

RAID 1

- In RAID 1, a complete file is stored on a single disk and then a second disk is used to contain an exact copy of the same file.

- By storing the same file on two or more separate disks, complete data redundancy is achieved.

- Another advantage of RAID 1 is that the data can be read off two or more disks at the same time, which allows for an improvement of read times over reading off a single disk as the data can be read in parallel. However, write performance is reduced since the file needs to be written out twice.

- RAID 1 is the most expensive RAID implementation since 50 percent of the storage space is for redundancy and not usable.

RAID 1+0

- Two RAID levels are combined: separate RAID 1 mirrored arrays are created, and RAID 0 is then used to stripe across them.

- This is often referred to as RAID 1+0. With RAID 1+0 the data is mirrored and then the mirrors are striped.

- This configuration offers higher performance than RAID 1 but the trade-off is a higher cost.

RAID 0+1

- This RAID level is the opposite of RAID 1+0. The difference involves the order of operations.

- With RAID 0+1, the stripe is created first and then the stripe is written to the mirror.

RAID 5

- With RAID 5, stripes of file data and parity data are stored over all the disks, so there is no longer a single parity check disk or write bottleneck.

- Compared with RAID levels that use a dedicated parity disk, RAID 5 improves the performance of multiple concurrent writes, since parity updates are spread over all the disks.

- If any single disk in a RAID 5 array fails, the parity information stored across the remaining drives can be used to re-create the data and rebuild the drive array.

- RAID 5 offers read performance close to that of RAID 1, although writes are slower because parity must be updated. RAID 5 also gives up far less capacity to redundancy than mirroring, since only one disk's worth of space holds parity, and it is a very popular drive redundancy implementation found in cloud datacenters.

- The drawback of RAID 5 arrays is that they may be a poor choice for write-intensive applications because of the performance slowdown from writing parity information. Also, when a single disk in a RAID 5 array fails, it can take a long time to rebuild the array, and performance is degraded during the rebuild.
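
Parity in RAID 5 is commonly computed as a byte-wise XOR of the data blocks in a stripe, which is what makes rebuilding a failed disk possible. The sketch below shows that idea for a single stripe; it is a simplification that does not model how parity rotates across disks, and the function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes]) -> bytes:
    """Recover the one missing block of a stripe from all surviving blocks.

    `surviving` must contain every remaining data block plus the parity block.
    """
    return xor_blocks(surviving)


if __name__ == "__main__":
    d0, d1, d2 = b"\x01\x02", b"\x0f\x10", b"\xaa\x55"
    parity = xor_blocks([d0, d1, d2])
    # Pretend the disk holding d1 failed and rebuild it from the rest.
    print(rebuild_missing([d0, d2, parity]) == d1)
```

Every write has to update the parity block as well as the data block, which is the source of the write penalty, and a rebuild has to read every surviving disk, which is why rebuilds are slow.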

RAID 6

- RAID 6 is an extension of the capabilities of RAID 5. The added capability offered in the RAID 6 configuration is that a second set of parity data is distributed across all the drives in the array.

- The advantage of adding the second parity arrangement is that RAID 6 can suffer two simultaneous hard drive failures and not lose any data.

- However, there is a performance penalty with this configuration: disk write performance is slower than with RAID 5 because of the need to write the second parity stripe.
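
The capacity cost of each RAID level discussed above can be compared with some simple arithmetic. The helper below is a sketch that assumes all disks are the same size and that a RAID 1 set keeps one logical copy regardless of how many mirrors it has; the function name and level labels are hypothetical.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB for an array of equally sized disks."""
    minimum = {"0": 2, "1": 2, "10": 4, "5": 3, "6": 4}
    if level not in minimum:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum[level]:
        raise ValueError(f"RAID {level} needs at least {minimum[level]} disks")
    if level == "0":
        return disks * disk_tb          # striping only, no redundancy
    if level == "1":
        return disk_tb                  # every disk holds the same copy
    if level == "10":
        if disks % 2:
            raise ValueError("RAID 10 needs an even number of disks")
        return disks / 2 * disk_tb      # half the disks hold mirror copies
    if level == "5":
        return (disks - 1) * disk_tb    # one disk's worth of parity
    return (disks - 2) * disk_tb        # RAID 6: two disks' worth of parity


if __name__ == "__main__":
    for level in ("0", "10", "5", "6"):
        print(f"RAID {level}: {usable_capacity_tb(level, 4, 2.0)} TB usable")
```

With four disks, for instance, RAID 5 keeps three disks' worth of usable space while RAID 1+0 and RAID 6 each keep two, which illustrates the trade-off between capacity and the number of failures an array can survive.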

Storage provisioning Techniques

The following techniques can be applied when provisioning and allocating storage space to servers. These techniques make sure that the provisioned storage space is secure and highly available.

Storage LUNs

- Storage LUNs (logical unit numbers) need to be created and mapped to the server before the server can access the storage in a SAN environment.

- The actual process of creating a LUN depends on the manufacturer. For example, Microsoft’s graphical interface is much different than the command-line utilities found in most Unix or Linux releases.

- In Windows Server, to create a LUN in the console you would click LUN Management. Then you would click Create LUN in the Actions pane and just follow the wizard prompts to provision a LUN.

Network Shares

- In general, storage is consolidated in a small number of large storage systems. This allows for centralized control and management of the storage infrastructure.

- The SAN administrator will set up sharing on the drive controllers that defines who is allowed to access certain storage resources and what they are allowed to do to the data they are accessing.

- Setting up network shares can be done manually in the on-prem datacenters, and in the case of cloud datacenter, by using automated provisioning tools that assign the storage when the cloud customer requests computing resources and specifies the type of storage required.

- The shared files are generally seen as folders in the operating system or appear as a locally attached drive. Some of the common share types are Server Message Block (SMB), used by Microsoft, and the Network File System (NFS), common on Linux distributions.

Zoning and LUN Masking

- Zoning and LUN masking techniques are used to restrict storage on the network to a specific server or a small group of servers.

- LUN masking is the process of making storage LUNs available for access by some host computers and restricted to others in the SAN.

- Grouping servers into zones enhances storage security. Zoning can isolate or restrict a single server to a specific LUN or many servers to a single LUN.

- With the use of zoning and LUN masking, the SAN can be restricted to eliminate multiple systems trying to access the same storage resources.

- This technique also prevents, for example, a Windows server from writing to a LUN that holds a Linux filesystem, which would corrupt the data on the storage array.

- LUN masking accomplishes the same goals as zoning but is implemented at the storage controller or HBA level and not in the SAN switching fabric that zoning uses.
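
Conceptually, LUN masking is a lookup table that maps each host initiator to the LUNs it is allowed to see. The sketch below models only that idea; the `LunMaskingTable` class and the initiator names are hypothetical, and real storage controllers expose this through their own vendor-specific management tools.

```python
class LunMaskingTable:
    """Toy model of LUN masking: initiator identifier -> permitted LUNs."""

    def __init__(self) -> None:
        self._allowed: dict[str, set[int]] = {}

    def grant(self, initiator: str, lun: int) -> None:
        """Make a LUN visible to the given initiator."""
        self._allowed.setdefault(initiator, set()).add(lun)

    def revoke(self, initiator: str, lun: int) -> None:
        """Hide a LUN from the given initiator (no error if it was not granted)."""
        self._allowed.get(initiator, set()).discard(lun)

    def visible_luns(self, initiator: str) -> list[int]:
        """LUNs the initiator can see; unknown initiators see nothing."""
        return sorted(self._allowed.get(initiator, set()))

    def can_access(self, initiator: str, lun: int) -> bool:
        return lun in self._allowed.get(initiator, set())


if __name__ == "__main__":
    table = LunMaskingTable()
    table.grant("windows-host-01", 0)   # hypothetical initiator names
    table.grant("linux-host-01", 1)
    print(table.can_access("windows-host-01", 1))
```

Denying by default, so that a host sees no LUNs until one is explicitly granted, is the design choice that keeps one server from accidentally writing to another server's storage.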

Multipathing

- Multipathing makes the storage highly available, fault resistant, and less restrictive for the servers accessing the storage systems.

- More than one HBA can be installed inside the server. This allows the operating system to use two or more HBAs and automatically fail over or reroute if an HBA should fail.

- Also, multiple SAN switches can be used to create more than one completely separate switching fabric.

- Multiple Fibre Channel ports can also be used to create multipathing. This serves the same purpose as multiple HBAs: should one Fibre Channel port fail on a storage controller, the redundant port will take over.

- Storage is a critical component in any type of datacenter, and many measures are taken to protect the data and keep it highly available. Multipathing is one of the critical considerations to make.
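
The failover behaviour described above can be illustrated with a small path-selection sketch. It assumes a simple priority-ordered (active/standby) policy, and the `Path` fields and names such as `hba0` and `fabric-A` are hypothetical; real multipath software also supports policies such as round-robin across all healthy paths.

```python
from dataclasses import dataclass


@dataclass
class Path:
    hba: str
    fabric: str
    healthy: bool = True


def select_path(paths: list[Path]) -> Path:
    """Return the first healthy path in priority order, or raise if none is left."""
    for path in paths:
        if path.healthy:
            return path
    raise RuntimeError("all paths to storage are down")


if __name__ == "__main__":
    paths = [Path("hba0", "fabric-A"), Path("hba1", "fabric-B")]
    print(select_path(paths).hba)   # primary path while everything is healthy
    paths[0].healthy = False        # simulate an HBA or fabric failure
    print(select_path(paths).hba)   # traffic fails over to the second path
```

Keeping the two paths on separate HBAs and separate fabrics means no single component failure removes both entries from the list.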

Storage Access Methods

The storage space that is provisioned and assigned to the server is in a raw format. It needs to be formatted according to the server's operating system before the storage space can be used. Every operating system supports different formats, and these are generally referred to as filesystems.

A filesystem defines the format in which files are stored on storage media. The filesystem is a structured representation of a set of data that is being written to a disk partition. Below are some of the commonly used filesystem types used today.

Unix File System (UFS)

- This type of filesystem is found in many Unix and Unix-like operating systems.

- UFS is a common filesystem for these operating systems and uses the familiar structure of /, a slash being the root of the filesystem’s multilevel tree structure.

- The root is at the top of the tree structure. Directories such as /bin, /usr, and /tmp follow directly below it.

- The file hierarchy flows down from these directories to complete the UFS.

Extended File System (EXT)

- This filesystem type is used in the various distributions of the Linux operating system and comes in many variants, such as EXT2, 3, and 4.

- The differences are in the number of files that can be stored, the size of the files and volumes, and the number of tables in the directory.

- While there are many different filesystem offerings in Linux, EXT is the most common. EXT has metadata and is modeled on UFS.

New Technology File System (NTFS)

- This is a Microsoft-developed filesystem that was released with the first version of the Windows NT operating system.

- NTFS features include access control and encryption. The master file table contains all the information about each file stored on the disk, including the size, allocation, and name.

File Allocation Table (FAT)

- This is a basic filesystem found on Microsoft operating systems, and it is also compatible with MS-DOS.

- This filesystem has been replaced by the newer NTFS filesystem from Microsoft. However, many systems still support the FAT filesystem for backward compatibility, and FAT is still common on memory sticks and floppy disks.

Virtual Machine File System (VMFS)

- This type of filesystem was developed by VMware. VMFS is used in the vSphere system as a storage filesystem for virtual machine images.

- VMFS was designed exclusively for the virtualized environment and supports many features, such as virtual machine migrations, the ability to add virtual disk space, and recovery mechanisms.

- VMFS is intended to ease provisioning of virtual machines and provides such features as point-in-time, or snapshot, disk images that are used as backups and for cloning and testing.

Zettabyte File System (ZFS)

- This type of filesystem was developed by Sun Microsystems for the Solaris OS.

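- ZFS combines a filesystem with a logical volume manager and is used in large storage systems, including as the storage layer underneath clustered or distributed storage systems.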

Storage Network Technologies

In large enterprise and cloud datacenters, organizations use storage systems that contain hundreds or thousands of HDDs or SSDs. These drives are grouped and connected over a network and then shared with the servers based on the requirements.

The following techniques are used to create a network of storage devices in order to implement the techniques described in previous sections such as storage management, storage access and storage provisioning techniques.

Direct Attached Storage (DAS)

- This is a common and also the easiest method to implement. A computer, laptop, or other computing device that has its own storage directly connected is considered to be direct-attached storage.

- These devices can be hard drives, flash drives, or any other type of storage that is connected to the computer and not over a network.

- The connection used is commonly an ATA, SATA, or SCSI interface connecting the storage media to the motherboard of the computer.

Access Type

- Direct Attached Storage (DAS) uses block-level access to reach the data on the storage devices. A server reads and writes directly to a hard disk rather than relying on a file-level protocol like CIFS or NFS.

- Block-level access allows the reading and writing to a hard disk drive (HDD) at the physical hardware level. A disk controller in a server reads and writes the disks at the block level.

- Compared to a file-based system, block level is both a faster and a more efficient storage access method for an operating system.

- From the cloud perspective where everything is managed and accessed virtually, the cloud service provider can partition a storage array for exclusive use by each customer.

Advantages & Disadvantages

- This dedicated storage cannot be accessed or shared with any other customer in the cloud datacenter. One of the big advantages of dedicated storage that the cloud offers is that the consumer can pay for only what is needed at that moment and purchase more dedicated storage in the future as required.

- When scaling your storage operations in the cloud, it is both efficient and cost effective to purchase the storage needed by using the pay-as-you-grow model.

Network Attached Storage (NAS)

- Network-attached storage (NAS) provides file-level access to data across a network.

- In a NAS configuration, files are sent over the network rather than blocks of data as in storage area networks (SANs).

- The data is not stored on a local computer, as with direct-attached storage, but is accessed over a LAN.

- Files are sent over the LAN using a protocol such as TCP/IP. The data structures are dependent on the operating system used.

- For example, with Microsoft Windows, the Common Internet File System (CIFS) protocol is used. With Linux, Network File System (NFS) is one of several file-sharing protocols commonly implemented.

Access Type

- NAS uses file-level access, and it is a common solution for sharing files between servers or users on the network. When storage data is available on a network, it can be shared with other users, applications, and computers.

Advantages

- The advantage of shared storage on a network is that there is a central area where all users can access the data they need. This central repository reduces the duplication of the same files at multiple locations in a network.

- It also makes backing up and restoring data easy since the files are all located in one central area.

Storage Area Network (SAN)

- A Storage Area Network (SAN) is a very high-speed, highly redundant network that is completely dedicated to storage traffic.

- When a server accesses storage over a SAN, the SAN must be completely lossless and highly available. The most common dedicated storage networking technology is Fibre Channel.

- Typically two SAN networks are configured for redundancy, and the two networks operate in parallel to each other and are not interconnected. This architecture offers two paths from the server to the storage arrays, with a primary network and a standby ready to take over should the primary fail.

Access Type

- Storage area network (SAN) uses block-level access mechanism to access the data on the storage devices and it is often found in larger computing environments such as in the cloud and enterprise data centers.

- With block-level access, a server reads and writes raw data directly to a hard disk. When a SAN is used, block data is transported across the network to the host bus adapter on the server.

- Protocols such as Fibre Channel, Fibre Channel over Ethernet (FCoE), or IP-based SCSI networks (commonly known as iSCSI) are used to make the block storage visible to the server.

- By using various storage networking protocols and access arrangements, the stored data can be securely protected and still be shared in the cloud even when multiple clients are accessing the same storage hardware.

Storage Access Protocols

Many different protocols are used in the storage world. Some were developed specifically for storage, whereas others borrow from traditional data networking. With virtualization and convergence taking place in the cloud, storage networking and data networking are being combined using the same protocols over a common network.

The following are the commonly used storage and network protocols:

Internet Protocol (IP)

- This is the predominant communication protocol, and virtually all LANs are based on IP. On top of IP are two transport-level protocols: the User Datagram Protocol (UDP) and the Transmission Control Protocol (TCP). The IP portion provides the network addressing for both UDP and TCP.

- UDP does not send acknowledgements and has no mechanism for retransmission. With TCP, a handshake sets up a session and keeps track of what data was sent and received.

- TCP will retransmit if there are any losses and is considered to be a reliable protocol.

Small Computer Systems Interface (SCSI)

- This protocol offers a standard set of signaling methods that allow for communications between the storage initiator and the storage provider or target.

- One of the limitations of SCSI is that the host and the storage drives must be a relatively short distance apart due to cable restrictions.

Internet Small Computer Systems Interface (iSCSI)

- This protocol uses TCP/IP as a reliable transport protocol over Ethernet.

- iSCSI encapsulates the SCSI command set in TCP segments, which are carried in IP packets over Ethernet. The reliable Transmission Control Protocol (TCP) ensures that the storage traffic is not lost across the network.

- There is no need for a SAN-based HBA installed in the server because a standard Ethernet card works for iSCSI traffic.

Ethernet

- Block-level storage has limitations with the Ethernet protocol. Ethernet will drop a frame if there is congestion or collisions on the network and leave it up to the upper-level protocols to recover and resend the missing data.

- Many quality-of-service mechanisms can be implemented to prioritize the SAN traffic so that it remains lossless even over Ethernet, which can drop packets.

Fibre Channel (FC)

- Fibre Channel protocol is one of the predominant storage protocols in storage area networking.

- Fibre Channel encapsulates inside its headers the SCSI command set to allow the server and storage controllers to exchange information on disk read, write, and other types of operations between the host operating systems and the remote storage.

Fibre Channel over Ethernet (FCoE)

- In this type of protocol, storage traffic will be carried over the same network as Ethernet LAN traffic.

- This is often referred to as being a converged fabric. When the switch fabrics of Ethernet and Fibre Channel are combined, many advantages can be realized.

- For example, this approach reduces the cabling and complexity of running multiple data and storage networks inside the large datacenters.

Each protocol has its unique advantages and disadvantages. For example, Fibre Channel is highly reliable and lossless because it was designed specifically for storage networking. However, it requires a completely separate network just for storage and a technical skill set different from traditional networking.

IP and iSCSI allow storage data to travel over traditional data networks, often mixed with traditional LAN data. However, a LAN can drop packets, and additional network management resources must be dedicated to ensure that the storage traffic is not adversely affected.

In the next post, we will learn the fundamental concepts of network infrastructure and the network protocols commonly used in traditional and cloud datacenters. The network is another important component in the IT world, and it plays a key role in enabling access to applications in Cloud Computing.