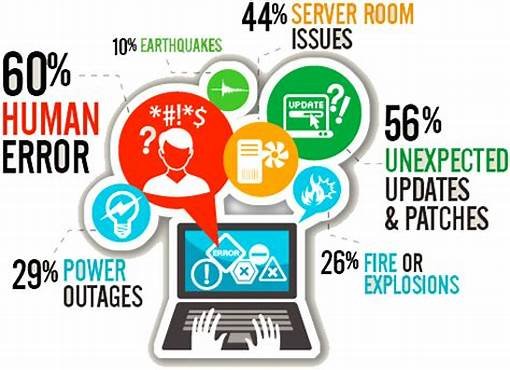

Cloud computing offers immense benefits, but it’s crucial to remember that outages are inevitable. This chapter emphasizes the importance of proactive Disaster Recovery planning and business continuity strategies to minimize downtime and ensure swift recovery.

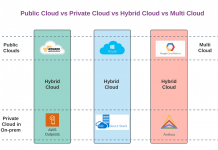

In the previous posts, we learned the concepts of Cloud Configurations and Cloud Deployments. In this post we will learn the importance of Business Continuity and Disaster Recovery strategies for Cloud based applications.

Introduction to Business Continuity (BC) and Disaster Recovery (DR)

Business Continuity (BC) and Disaster Recovery (DR) play a vital role in ensuring an organization’s business and IT resilience during unexpected disruptions

Business Continuity (BC) outlines how a business will proceed during and following a disaster. It encompasses strategies and plans to keep core business functions intact even under duress. BC minimizes financial losses, technological consequences, and provides peace of mind for employees and business owners.

It primarily focuses on

-

- Operational Continuity: Ensuring essential business processes continue seamlessly.

- Alternate Locations: Contingency plans for relocating operations if necessary.

- Smaller Interruptions: Addressing minor disruptions like power outages.

Also Read: Cloud Computing Quick Reference Guide

Disaster Recovery (DR) is the strategic plan that puts business into place for responding to catastrophic events. These events can include natural disasters, fires, cybercrimes, or acts of terror. DR plan takes below measures.

- Response Plans: How the business reacts during and immediately after the event.

- Return to Normal Operation: Measures to restore technical and communication systems.

- Speed Matters: DR aims to bring the organization back to safe, normal operation as quickly as possible.

Cloud computing operations can indeed be complex, and failures are inevitable. It’s crucial to anticipate these failures and prepare for disruptions in advance. Some outages, like web server failures, can be addressed by implementing multiple web servers behind a load balancer. Additionally, virtualization allows for quick movement of virtual machines to new hardware platforms during an outage. However, when an entire datacenter goes offline, recovery complexity significantly increases.

- Cloud Service Provider Resilience: When companies migrate computing operations to the cloud, cloud service providers maintain highly resilient hosting facilities. These facilities include redundant systems for power, cooling, networking, storage, and computing.

- Responsibility: Despite cloud providers’ efforts, it remains the organization’s responsibility to plan for and recover from disruptions within the cloud computing center.

- Natural Disasters and Infrastructure Outages: Natural disasters (e.g., weather-related events) or infrastructure outages (such as power failures) can impact cloud datacenters. Having robust recovery plans is essential.

Remember, a solid business continuity plan ensures that your critical systems remain operational even in the face of unexpected disruptions. By acknowledging the potential for disruptions and implementing robust business continuity plans, organizations can leverage the power of the cloud with confidence, ensuring their operations remain resilient and adaptable in the face of unforeseen challenges.

Why Business Continuity and Disaster Recovery are important ?

- Financial Impact: Without proper BC and DR plans, financial losses can escalate rapidly.

- Technological Consequences: Loss of sensitive data or critical systems.

- Peace of Mind: Clear policies for disaster response create a more comfortable work environment.

Note that, BC and DR are not interchangeable—they complement each other. While BC focuses on continuity during disruptions, DR concentrates on efficient recovery after serious incidents. Together, they form a robust safety net for organizations.

Difference between Business Continuity (BC) and Disaster Recovery (DR)

While both business continuity (BC) and disaster recovery (DR) are crucial for organizational resilience, they address different aspects of preparing for and responding to disruptions. Here’s a breakdown of their key differences:

Scope:

- Business Continuity: Focuses on maintaining essential business functions during and after any disruption, regardless of the cause. This includes IT outages, power failures, natural disasters, pandemics, or even cyberattacks.

- Disaster Recovery: Specifically addresses recovering IT infrastructure and data after a major disaster or outage, ensuring critical systems and applications are restored quickly.

Objectives:

- Business Continuity: Aims to minimize downtime and ensure continued business operations, even at a reduced capacity, to mitigate financial losses and reputational damage.

- Disaster Recovery: Prioritizes restoring full functionality of IT systems and data to normal operating levels as quickly as possible.

Activities:

- Business Continuity:

- Develops plans for alternative work arrangements (remote work, paper-based processes)

- Ensures communication protocols and decision-making processes during disruptions

- Identifies and prioritizes critical business functions

- Conducts training and exercises to prepare employees for various scenarios

- Disaster Recovery:

- Implements data backup and replication strategies

- Establishes failover mechanisms to switch to secondary systems

- Maintains backup infrastructure at geographically diverse locations

- Tests recovery procedures to ensure effectiveness

Relationship:

- Disaster Recovery is a subset of Business Continuity. A comprehensive BC plan encompasses DR strategies for IT systems and data, but also extends to broader aspects of business operations.

Analogy:

- Think of Business Continuity as a broad umbrella that protects your entire business from various disruptions. Disaster Recovery is a specific tool within that umbrella, specifically designed to handle major IT-related outages.

In conclusion, both Business Continuity and Disaster Recovery are essential for organizational preparedness. While DR focuses on recovering IT infrastructure, BC takes a holistic approach to ensuring business continuity in any disruptive scenario.

Cloud Disaster Recovery Methods:

In today’s digital world, downtime is a costly enemy. Cloud disaster recovery (DR) helps businesses prepare for and bounce back from unforeseen disruptions, ensuring critical applications and data remain accessible. But what are the key concepts underpinning this essential safeguard? Let’s delve into the world of redundancy and explore various DR methods.

Redundancy: Redundancy involves duplicating critical components – hardware, software, or data – to ensure continuous operation if one element fails. This forms the foundation of many DR strategies.

Essential DR Methods:

- Failover: Seamlessly switching to a backup system upon detecting a primary system failure.

- Geographical Diversity: Distributing data and applications across geographically separated locations to minimize the impact of localized disasters.

- Failback: Returning operations to the primary system after the disruption is resolved.

- Replication: Continuously copying data to a secondary location for quick recovery.

- Site Mirroring: Maintaining an identical copy of the production environment at a remote site for immediate failover.

Choosing the Right Approach:

- Hot Site: A fully functional replica of the production environment, ready for immediate use, but comes at a higher cost.

- Cold Site: Offers bare-metal infrastructure requiring manual configuration before use, but more cost-effective.

- Warm Site: A partially pre-configured site, allowing faster recovery compared to cold sites but less immediate than hot sites.

Additional Considerations:

- Backup and Recovery: Regularly backing up data provides a safety net for unforeseen events.

- Archiving and Offsite Storage: Long-term data retention requires secure offsite storage solutions.

- Replication Types: Synchronous replication ensures data consistency across sites, while asynchronous offers faster performance but may introduce slight data lag.

Understanding Key Metrics:

- RTO (Recovery Time Objective): Defines the acceptable downtime during a disaster.

- RPO (Recovery Point Objective): Specifies the maximum tolerable data loss in case of an outage.

- MTBF (Mean Time Between Failures): Average time between system failures.

- MTTR (Mean Time To Repair): Average time to restore functionality after a failure.

Mission Critical Requirements:

Organizations with critical applications require robust DR strategies, often utilizing a combination of methods based on their specific RTO, RPO, and budget constraints.

By understanding these concepts and methods, businesses can build a comprehensive DR plan, ensuring their cloud infrastructure remains resilient in the face of adversity. Remember, proper planning and preparation are key to navigating the unexpected and safeguarding your critical operations.

Solutions to meet Business Continuity and Disaster Recovery Requirements

1. Fault tolerance

Cloud computing environments are designed with fault tolerance as a core principle, ensuring services remain available to users even when individual components fail. This redundancy, built into both hardware and software, safeguards against unexpected disruptions and minimizes downtime.

Hardware Redundancy:

- Servers: Equipped with redundant power supplies, multiple CPUs, and LAN/SAN interfaces for storage.

- Storage: Utilizes RAID for data protection and often employs dual Fibre Channel fabrics for backup and failover capabilities.

- Networking: Redundant LAN backbones connect datacenters and the internet, ensuring traffic flow even if a switch or router fails.

- Datacenter Infrastructure: Backup power generation and redundant cooling systems maintain operations during outages.

Software-based Fault Tolerance:

- Virtualization: Enables seamless migration of virtual machines between servers or datacenters in case of hardware failure.

- Storage Virtualization: Allows data replication and backup across diverse locations, enhancing fault tolerance.

To Know more about Storage Virtualization

This multi-layered approach to fault tolerance provides a robust foundation for cloud services. By anticipating and mitigating potential failures, cloud providers ensure continuous operation and minimize service interruptions for users, fostering a reliable and resilient environment.

In essence, cloud computing proactively builds in safeguards to prevent disruptions, ensuring services remain available and operational even when faced with unexpected challenges.

2. High availability

High availability (HA) is very important for cloud services, ensuring near-constant uptime and minimizing service disruptions. It’s often measured as a percentage, with the coveted “five nines” representing 99.999% uptime, translating to a mere 5.26 minutes of downtime per year.

However, understanding these numbers requires careful consideration:

- Focus on unplanned downtime: Availability ratings typically exclude scheduled maintenance periods.

- Downtime definitions vary: Different providers might define “downtime” differently, impacting the reported figures.

Building Redundancy for Reliability:

Cloud providers leverage various strategies to achieve high availability:

- Availability Zones: Datacenters are divided into geographically isolated zones, each offering redundancy within its boundaries.

- High Availability Pairs: Critical devices like firewalls or load balancers are configured in pairs, constantly synchronizing state information. In case of a failure, the secondary device seamlessly takes over, ensuring uninterrupted service.

- Redundant Routers: Protocols like VRRP maintain a single gateway even when a router fails, automatically rerouting traffic to the backup router without service disruption.

The Responsibility Lies with You:

While cloud providers offer robust infrastructure, the ultimate responsibility for your site’s availability rests with you.

- Evaluate your needs: Determine the acceptable level of downtime based on your application’s criticality.

- Cost-benefit analysis: Higher availability often comes at a cost, so strike a balance between your needs and budget.

By understanding these concepts and tailoring your approach, you can leverage the power of cloud computing to ensure your applications remain accessible and resilient, meeting the ever-growing demands of today’s digital landscape.

3. Local clustering /geoclustering and Non-high availability resources

Let’s delve into the concepts of local clustering, geo-clustering, and managing non-high-availability resources in cloud deployments.

Local Clustering and Geo-Clustering:

-

- Local Clustering: In local clustering, a group of servers (both physical and virtual) is configured to appear as a single logical system. These servers work together to provide high availability. If one component fails, the system can continue operating because other components take over seamlessly. Local clustering is typically implemented within a single datacenter.

- Geo-Clustering: Geo-clustering takes fault tolerance to the next level. Servers are distributed across multiple cloud datacenters (geographically dispersed locations). This approach ensures high availability and disaster recovery. If one datacenter experiences an issue, the workload can be shifted to another datacenter without service interruption.

Non-High-Availability Resources:

-

- Not every system or service in a cloud deployment requires high availability. Some systems can afford to be unavailable for short periods without significant impact. Identifying these non-critical components allows cost optimization.

- For instance, consider a software test site hosted in the cloud. It may not justify the expense of deploying a hot site (a fully redundant backup) or any backup at all. By recognizing such non-high-availability systems, costs can be reduced.

- At the system level, deploying nonredundant components (such as power supplies and network connections) for these non-critical services further contributes to cost savings.

By understanding the nuances of clustering and selectively implementing high availability strategies, you can strike a balance between resilience, cost-effectiveness, and your specific application requirements. This ensures optimal resource allocation and maximizes the value proposition of your cloud deployment.

4. Multipathing

Multipathing thrives within redundant network designs, where critical components like switches, routers, load balancers, and firewalls are equipped with multiple interconnected links. This intricate web of connections offers several advantages:

- Failover: If a single link falters, the remaining paths seamlessly take over the traffic load, maintaining seamless connectivity.

- Load balancing: Traffic can be intelligently distributed across multiple active paths, optimizing network performance and preventing bottlenecks.

Unveiling Multipathing Techniques:

- Routing protocols: Most contemporary protocols support multipath routing, enabling the utilization of all available links between source and destination.

- Layer 2 solutions: Technologies like link aggregation, TRILL, and Cisco FabricPath empower multiple links to concurrently forward traffic within the datacenter, enhancing redundancy and performance.

- Storage area networks (SANs): Implementing a SAN_A/SAN_B configuration completely separates Fibre Channel fabrics. Should one fabric encounter an issue, the other ensures uninterrupted storage traffic flow between initiators and targets.

Read: Storage Area Networking Concepts

By embracing multipathing, organizations can construct robust and adaptable networks. This approach not only safeguards against unforeseen outages but also paves the way for efficient traffic management, ultimately fostering a reliable and high-performing network infrastructure.

5. Load balancing

In the dynamic world of cloud computing, load balancers play a pivotal role in ensuring scalability and resilience. They act as intelligent traffic directors, distributing incoming requests across a pool of computing resources, be it servers or applications.

Scaling on Demand:

- Load balancers facilitate horizontal scaling, enabling the seamless addition or removal of resources based on fluctuating workloads. This dynamic approach ensures optimal resource utilization and eliminates bottlenecks.

Resilience Through Redundancy:

- Load balancers continuously monitor the health of each server within the pool. In the event of a failure, the affected server is automatically excluded from the rotation, and connections are redirected to healthy resources. This redundancy mechanism safeguards against potential outages and guarantees uninterrupted service.

Global Reach and Fault Tolerance:

- Global server load balancing extends this functionality across geographically dispersed datacenters. By modifying DNS records, incoming requests are directed to the nearest or most suitable datacenter, optimizing performance and user experience.

- In the event of a datacenter outage, the global load balancing system seamlessly redirects traffic to remaining operational sites, ensuring service continuity and minimizing downtime.

In essence, load balancers act as the cornerstones of scalable and resilient cloud deployments. They orchestrate efficient resource utilization, safeguard against failures, and empower global reach, ultimately delivering a seamless and reliable user experience.