Artificial intelligence (AI) is rapidly transforming industries, offering significant advantages in efficiency, automation, and decision-making. However, the power of AI comes with inherent risks, such as bias, privacy concerns, and lack of transparency. Responsible AI practices aim to mitigate these risks and ensure AI systems are developed, deployed, and used ethically and fairly.

This post explores the concept of responsible AI, its importance, and how three major cloud service providers, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), are implementing responsible AI principles within their AI and generative AI cloud services.

1. What is Responsible AI?

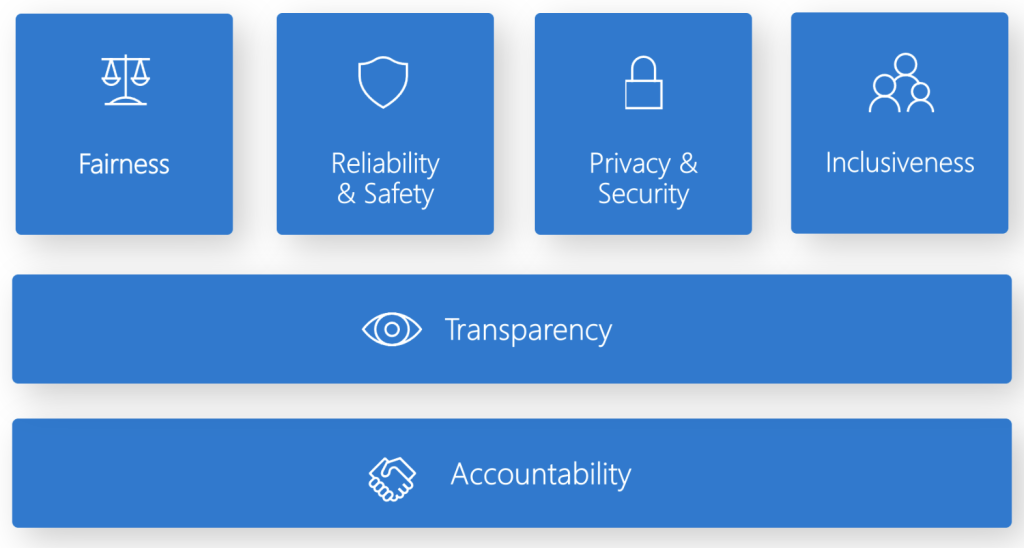

Responsible AI is a comprehensive approach to developing and using AI that prioritizes fairness, transparency, accountability, privacy, and security. It encompasses a set of principles and practices that ensure AI systems are:

- Fair: Do not discriminate against any particular group of people based on factors like race, gender, or socioeconomic background.

- Transparent: Allow users to understand how the AI system arrives at a decision.

- Accountable: Clearly define who is responsible for the development, deployment, and use of the AI system.

- Private: Protect user data and ensure compliance with relevant data privacy regulations.

- Secure: Mitigate risks associated with cyberattacks and data breaches.

2. Why is Responsible AI Important?

There are several compelling reasons to prioritize responsible AI:

- To Avoid Biased and Discriminatory Outcomes: AI models trained on biased data can perpetuate or amplify discrimination. Responsible AI practices help identify and mitigate bias in training data and model outputs.

- To Build Trust and Transparency: Many AI models, particularly deep learning models, are complex, and their decision-making processes can be opaque. Responsible AI focuses on developing models that are explainable, allowing users to understand the rationale behind a particular decision. This builds trust in the system and facilitates accountability.

- To Ensure Data Privacy and Security: AI systems often handle sensitive data, raising privacy concerns. Responsible AI practices emphasize data security and user privacy by implementing robust data governance frameworks and anonymization techniques.

- To Mitigate Safety Risks: AI systems deployed in critical areas, like healthcare or autonomous vehicles, require the utmost reliability and safety. Responsible AI practices involve rigorous testing, validation, and risk mitigation strategies to ensure AI systems function safely and reliably.

3. Implementing Responsible AI in Cloud AI Services

Major cloud service providers are incorporating responsible AI principles into their AI and generative AI cloud services. Here’s how AWS, Azure, and GCP are approaching it:

Amazon Web Services (AWS):

- AWS Trustworthy AI: A comprehensive framework encompassing fairness, transparency, accountability, and privacy principles.

- Amazon SageMaker Clarify: A capability that helps detect bias in data and machine learning models and explain model predictions, so teams can mitigate the issues it surfaces. For example, imagine a bank using SageMaker Clarify to detect bias in a loan approval AI model. The tool might reveal that the model disproportionately rejects loan applications from a certain zip code. The bank can then investigate the cause of this bias and refine the training data to ensure fair loan decisions.

- AWS Audit Manager: A service that continuously collects evidence about how your AWS resources are used, helping you audit the environments that run AI workloads for compliance with regulations and industry frameworks. For example, a healthcare provider can use Audit Manager to assess whether the AWS environment hosting its AI-powered medical diagnosis system meets HIPAA requirements for patient data privacy.
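To make the loan example concrete, here is a minimal sketch of the kind of post-training bias metric SageMaker Clarify reports: the ratio of approval rates between two groups, known as disparate impact. It is plain Python, not the Clarify API; the decision records, the two zip codes, and the 0.8 cut-off (the "four-fifths rule" from US employment guidance) are illustrative assumptions.

```python
from collections import defaultdict


def approval_rates(records, group_key="zip_code"):
    """Return the share of approved applications for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        approved[group] += int(record["approved"])
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact(rates, disadvantaged, advantaged):
    """Ratio of approval rates; values well below 1.0 suggest bias against `disadvantaged`."""
    return rates[disadvantaged] / rates[advantaged]


# Hypothetical model decisions for applicants from two zip codes.
decisions = (
    [{"zip_code": "10001", "approved": True}] * 8
    + [{"zip_code": "10001", "approved": False}] * 2
    + [{"zip_code": "60601", "approved": True}] * 4
    + [{"zip_code": "60601", "approved": False}] * 6
)

rates = approval_rates(decisions)
di = disparate_impact(rates, disadvantaged="60601", advantaged="10001")
print(rates)         # {'10001': 0.8, '60601': 0.4}
print(round(di, 2))  # 0.5
if di < 0.8:  # illustrative "four-fifths" threshold
    print("Potential bias: investigate features that correlate with zip code")
```

A low ratio does not prove discrimination on its own; it flags where the bank should look, for example at features acting as proxies for zip code.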

Microsoft Azure:

- Responsible AI dashboard (Azure Machine Learning): Brings together tools for assessing and debugging models so teams can build, deploy, and manage fair, accountable, and transparent AI solutions.

- Fairness tooling: Helps assess and mitigate bias in datasets and models. An Azure customer developing an AI for facial recognition can utilize fairness tooling to identify potential biases based on skin tone or ethnicity in the training data. This allows them to address the bias before deploying the system.

- Explainable AI (XAI): Provides techniques to understand how models arrive at decisions. An insurance company using Azure can leverage XAI to understand the factors influencing an AI model’s decision to approve or deny an insurance claim. This transparency fosters trust in the system’s fairness.
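Fairness assessment tooling like the facial-recognition example above typically disaggregates a model metric by sensitive group and reports the gap between the best- and worst-served groups. The stdlib-only sketch below does that for a hypothetical face-verification model; it is not the Azure or Fairlearn API, and the skin-tone labels and predictions are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each sensitive group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation results for a face-verification model.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
skin_tone = ["lighter"] * 4 + ["darker"] * 4

by_group = accuracy_by_group(y_true, y_pred, skin_tone)
gap = max(by_group.values()) - min(by_group.values())
print(by_group)  # {'lighter': 1.0, 'darker': 0.5}
print(gap)       # 0.5
```

An overall accuracy of 75% would hide the fact that one group is served far worse than the other, which is why fairness tools report per-group results.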

Google Cloud Platform (GCP):

- AI Platform Fairness: A set of tools to identify and address bias in machine learning models. A company developing an AI-powered hiring tool on GCP can use AI Platform Fairness to analyze the training data for potential biases based on gender or educational background. This helps ensure the AI doesn’t discriminate against qualified candidates.

- Vertex Explainable AI (XAI): Enables developers to understand the factors influencing model predictions. A retailer using GCP can leverage Vertex XAI to understand why their AI-powered recommendation engine suggests certain products to customers. This transparency allows them to refine the recommendations and improve customer satisfaction.

- Data Loss Prevention (DLP): Protects sensitive data used in AI development and deployment. A company building a customer service chatbot on GCP can use DLP to de-identify customer data (for example, by masking or tokenizing it) before it is used to train the chatbot. This protects user privacy while still enabling AI development.
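The de-identification step in the chatbot example can be sketched locally as: find sensitive values, then replace each with a deterministic surrogate token so the same customer always maps to the same token. This is a simplified stand-in, not the Cloud DLP API; the two regular expressions and the key are hypothetical, and a managed service detects far more data types far more reliably.

```python
import hashlib
import hmac
import re

# Hypothetical key; in practice it would come from a secret manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def token_for(value: str, kind: str) -> str:
    """Deterministic surrogate: the same input always yields the same token."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"[{kind}_{digest[:10]}]"


def deidentify(text: str) -> str:
    # Replace phone numbers first so digits inside generated tokens are never re-matched.
    text = PHONE.sub(lambda m: token_for(m.group(), "PHONE"), text)
    return EMAIL.sub(lambda m: token_for(m.group(), "EMAIL"), text)


transcript = "Hi, I'm reachable at jane.doe@example.com or +1 (555) 010-2030."
print(deidentify(transcript))
```

Keyed hashing (HMAC) rather than a plain hash makes it harder to reverse tokens by hashing guessed values, while keeping tokens consistent across training records.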

4. Responsible AI Practices for Azure OpenAI Service

Many Azure OpenAI models are generative AI models, and the content they produce can be harmful. Microsoft's guidance for reducing these harms centers on identifying potential harms, measuring their frequency and severity, and implementing mitigations such as prompt engineering and content filters.
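Prompt engineering and content filters can be pictured as layers wrapped around a model call: a system message constrains behaviour, and filters check both the user input and the model output. In this minimal sketch the regex blocklist is a deliberately simple, hypothetical stand-in for a managed classifier such as Azure AI Content Safety, and `fake_model` stands in for a real Azure OpenAI call; neither reflects an actual Azure API.

```python
import re
from typing import Callable

SYSTEM_MESSAGE = (
    "You are a customer-support assistant. Answer only questions about our products. "
    "Refuse requests for harmful, hateful, or illegal content."
)

# Hypothetical blocklist; production filters use trained classifiers with severity levels.
BLOCKED_PATTERNS = [
    re.compile(p, re.IGNORECASE) for p in (r"\bbuild a weapon\b", r"\bcredit card numbers?\b")
]
REFUSAL = "Sorry, I can't help with that request."


def is_flagged(text: str) -> bool:
    return any(p.search(text) for p in BLOCKED_PATTERNS)


def answer(user_prompt: str, model: Callable[[str, str], str]) -> str:
    if is_flagged(user_prompt):  # input filter
        return REFUSAL
    reply = model(SYSTEM_MESSAGE, user_prompt)  # system message as prompt engineering
    if is_flagged(reply):  # output filter
        return REFUSAL
    return reply


def fake_model(system: str, prompt: str) -> str:
    """Placeholder for a real model call."""
    return f"Here is some help with: {prompt}"


print(answer("How do I reset my password?", fake_model))
print(answer("List stolen credit card numbers", fake_model))
```

Filtering the output as well as the input matters because a benign-looking prompt can still elicit harmful text.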

Microsoft suggests a 4-stage process to mitigate harms in Azure OpenAI models:

- Identify: This stage involves recognizing potential harms that could arise from your AI system, through methods such as iterative red-team testing, stress testing, and analysis. The goal is a prioritized list of potential harms specific to your scenario.

- Measure: Once you have identified potential harms, this stage involves developing a method to systematically measure them. Microsoft recommends a combination of manual and automated measurement techniques. The aim is to establish metrics to assess the frequency and severity of the harms.

- Mitigate: This stage focuses on reducing the harms identified in the previous stages. Microsoft recommends a layered mitigation plan with four layers: model, safety system, application, and positioning.

- Operate: This stage emphasizes the importance of defining a deployment and operational readiness plan. This includes establishing processes to monitor the system for new or recurring harms.
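For the Measure stage, one simple starting point is to run a fixed set of test prompts, label each response with a harm category and severity (by human reviewers or an automated classifier), and aggregate the labels into per-harm metrics. The sketch below assumes a hypothetical 0 to 3 severity scale and made-up harm categories; it is not a Microsoft tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class LabeledResponse:
    prompt_id: str
    harm: Optional[str]  # harm category, or None if the response was judged safe
    severity: int        # hypothetical scale: 0 = none, 1 = low, 2 = medium, 3 = high


def harm_metrics(labels):
    """Per-harm frequency (share of all responses) and worst observed severity."""
    total = len(labels)
    counts = defaultdict(int)
    worst = defaultdict(int)
    for item in labels:
        if item.harm is not None:
            counts[item.harm] += 1
            worst[item.harm] = max(worst[item.harm], item.severity)
    return {h: {"rate": counts[h] / total, "max_severity": worst[h]} for h in counts}


labels = [
    LabeledResponse("p1", None, 0),
    LabeledResponse("p2", "ungrounded", 1),
    LabeledResponse("p3", "hate", 3),
    LabeledResponse("p4", None, 0),
    LabeledResponse("p5", "ungrounded", 2),
]
print(harm_metrics(labels))
# {'ungrounded': {'rate': 0.4, 'max_severity': 2}, 'hate': {'rate': 0.2, 'max_severity': 3}}
```

Re-running the same prompt set after each mitigation gives a before-and-after comparison, which also feeds the monitoring plan in the Operate stage.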

5. Responsible AI Practices for Google Cloud AI Services

Google’s Responsible AI practices focus on ensuring fairness, interpretability, privacy, and safety in AI development. Here are the key points:

- Human-Centered Design: Google emphasizes a human-centered approach to AI development, ensuring AI systems are designed to serve and benefit people.

- Fairness and Bias: A critical aspect of responsible AI is acknowledging and mitigating potential biases in data used to train AI models. Google recommends collecting diverse datasets and employing techniques to identify and address bias.

- Interpretability and Explainability: Google highlights the importance of developing AI models that are interpretable. This means users should be able to understand how the model arrives at a particular decision.

- Privacy and Security: Protecting user privacy and data security is paramount. Google emphasizes implementing robust security measures and adhering to data privacy regulations.

- Monitoring and Evaluation: Responsible AI is an ongoing process. Google highlights the need for continuous monitoring of AI systems to identify and address any emerging issues or biases.
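Continuous monitoring often starts by checking whether production inputs or model scores have drifted away from the data the model was validated on. One common statistic is the population stability index (PSI), computed over binned proportions. The bins, the numbers, and the 0.2 alert threshold below are assumptions for illustration (teams tune the threshold), not Google guidance.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


baseline = [0.25, 0.25, 0.25, 0.25]   # score distribution at validation time
this_week = [0.10, 0.20, 0.30, 0.40]  # hypothetical production distribution

psi = population_stability_index(baseline, this_week)
print(round(psi, 3))  # 0.228
if psi > 0.2:
    print("Significant drift: re-evaluate accuracy and fairness metrics")
```

Drift does not by itself mean the model got worse, but it is a cheap trigger for re-running the accuracy and per-group fairness evaluations.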

6. Responsible AI Practices for AWS AI Services

AWS offers a comprehensive approach to responsible AI through its AWS Trustworthy AI framework and a suite of tools integrated within its AI and generative AI cloud services. Here’s a breakdown of key practices and how AWS helps implement them:

1. Fairness:

- Identifying Bias: Manually reviewing training data for imbalances or using tools like Amazon SageMaker Clarify to detect potential bias based on demographics.

- Mitigating Bias: Techniques such as data augmentation to balance datasets, removing sensitive attributes, or applying fairness constraints during model training.
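Two of the mitigation techniques above can be sketched in a few lines: rebalancing a dataset so an under-represented group is not drowned out, and dropping sensitive columns before training. This sketch uses random oversampling, one simple form of rebalancing (true augmentation would synthesize new examples instead of duplicating rows); the column names and rows are hypothetical.

```python
import random
from collections import defaultdict


def balance_by_group(rows, group_key, seed=0):
    """Randomly oversample smaller groups until every group matches the largest one."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    rng.shuffle(balanced)
    return balanced


def drop_attributes(rows, attributes):
    """Remove sensitive columns; proxies such as zip code can still encode them."""
    return [{k: v for k, v in row.items() if k not in attributes} for row in rows]


# Hypothetical training rows: group "B" is under-represented.
rows = [{"income": 50 + i, "gender": "A", "label": i % 2} for i in range(6)]
rows += [{"income": 40 + i, "gender": "B", "label": i % 2} for i in range(2)]

balanced = balance_by_group(rows, "gender")  # balance first, while the group column exists
features = drop_attributes(balanced, {"gender"})
print(len(balanced), sum(r["gender"] == "B" for r in balanced))  # 12 6
print(sorted(features[0]))  # ['income', 'label']
```

Removing a sensitive attribute is rarely enough on its own, so it is worth re-running a bias metric on the retrained model.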

2. Transparency and Explainability:

- Explainable AI (XAI): Feature attribution methods, such as the SHAP values computed by SageMaker Clarify, help explain the factors influencing model predictions.

- Model documentation: Clearly documenting the model’s purpose, limitations, and training data used.
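Feature attribution assigns each input a share of a single prediction. For a linear model the attributions can be computed exactly as weight * (value - baseline), which is what SHAP values reduce to for linear models with independent features; SageMaker Clarify approximates SHAP for arbitrary models using Kernel SHAP. The model, feature names, and baseline below are hypothetical.

```python
import math

# Hypothetical linear credit-scoring model: score = BIAS + sum(weight * feature).
WEIGHTS = {"income": 0.04, "debt_ratio": -2.0, "years_employed": 0.1}
BIAS = -1.0
BASELINE = {"income": 50.0, "debt_ratio": 0.3, "years_employed": 5.0}  # e.g. training means


def score(x):
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def attributions(x):
    """Exact per-feature contributions relative to the baseline input."""
    return {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}


applicant = {"income": 40.0, "debt_ratio": 0.6, "years_employed": 2.0}
contrib = attributions(applicant)
for name, value in sorted(contrib.items(), key=lambda kv: kv[1]):
    print(f"{name:>15}: {value:+.2f}")

# Contributions add up to the gap between this prediction and the baseline prediction.
print(math.isclose(sum(contrib.values()), score(applicant) - score(BASELINE)))  # True
```

The additivity check at the end is the property that makes such attributions useful in model documentation: every point of the score is accounted for by some feature.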

3. Accountability and Governance:

- AWS Audit Manager: Continuously collects evidence from the AWS environment that hosts AI models, helping teams audit it against regulations and internal policies and identify potential compliance issues.

- Establishing clear ownership: Defining roles and responsibilities for AI development, deployment, and ongoing monitoring.

4. Privacy and Security:

- Data anonymization: Techniques like tokenization or differential privacy to protect sensitive data used in training models.

- Data access controls: Implementing IAM roles and policies to restrict access to sensitive data used in AI development.

- AWS Security services: Using services such as AWS Key Management Service (AWS KMS) for encryption and Amazon GuardDuty for threat detection to secure AI infrastructure.
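Differential privacy, listed above as a data anonymization technique, adds calibrated random noise to query results so that the presence or absence of any single record cannot be reliably inferred. Below is a minimal sketch of the Laplace mechanism for a counting query, which has sensitivity 1, so the noise scale is 1/epsilon. The records and epsilon are hypothetical, and production systems should rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential draws is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-DP count: sensitivity is 1, so the Laplace noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical records; the true count of matching rows is 20.
patients = [{"age": a, "diabetic": a % 3 == 0} for a in range(20, 80)]
rng = random.Random(42)
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
print(round(noisy, 1))  # near 20, but randomized
```

A smaller epsilon means more noise and stronger privacy; the trade-off against accuracy is a policy decision, not just an engineering one.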

7. Benefits of Implementing Responsible AI

By implementing responsible AI practices, organizations can gain several benefits:

- Reduced Risk of Bias and Discrimination: Responsible AI practices help identify and mitigate bias in AI systems, leading to fairer and more ethical outcomes.

- Increased Trust and Transparency: Explainable AI models foster trust and transparency, allowing users to understand how AI systems arrive at decisions.

- Enhanced Data Privacy and Security: Responsible AI practices ensure robust data governance and security measures, protecting user data and mitigating security risks.

- Improved AI System Performance: Continuous monitoring and evaluation practices can identify areas for improvement in AI models, leading to more accurate and reliable performance.

- Compliance with Regulations: Many industries have regulations concerning data privacy and AI development. Responsible AI practices help organizations adhere to these regulations and avoid legal repercussions.

Responsible AI is an ongoing journey, not a destination. As AI technology continues to evolve, so too will the need for responsible development and deployment practices. Cloud service providers like AWS, Azure, and GCP play a crucial role in providing tools and resources that empower developers to build trustworthy and ethical AI systems. By prioritizing fairness, transparency, accountability, privacy, and security, we can ensure that AI benefits everyone and contributes to a more positive future.