This blog post dives into the world of Generative AI (Gen AI), starting with the fundamentals. It breaks down what Gen AI is and what it isn't, then explores its evolution. It compares Gen AI to traditional machine learning models and clarifies the differences between Artificial Intelligence, Machine Learning, Deep Learning, and Gen AI. Finally, it covers popular generative models, the concept of generative AI hallucinations, and the drawbacks and limitations of this technology.

1. What is Generative AI?

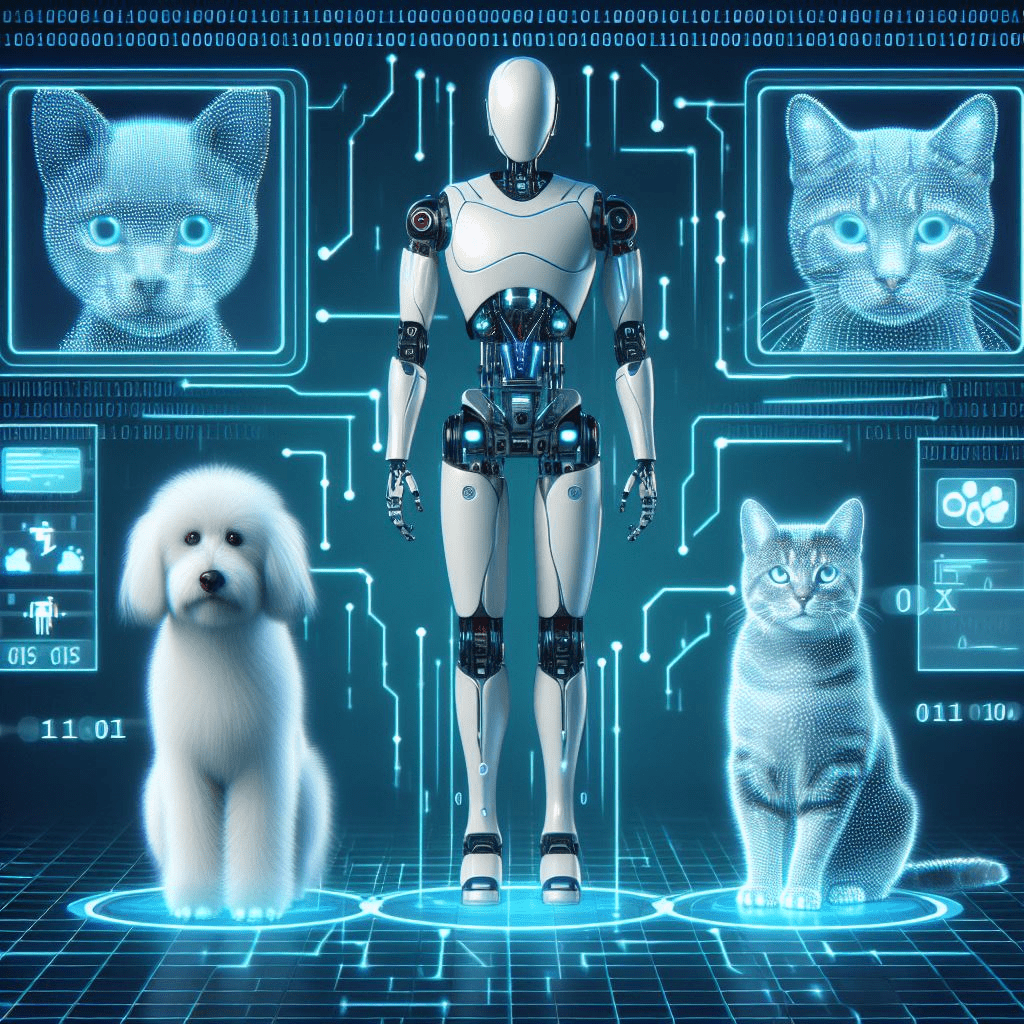

Generative AI, or Generative Artificial Intelligence, refers to AI systems capable of creating new content such as text, images, videos, or other data using generative models. These models learn from large datasets to recognize patterns and structures, enabling them to generate new data that resembles the data they were trained on. This technology advanced significantly in the early 2020s, particularly with transformer-based deep neural networks and large language models. For example, the image above was created for this blog post by a generative AI model that was prompted to generate an image of a dog and a cat.

Generative AI has a wide range of applications across various industries, including software development, healthcare, finance, entertainment, customer service, sales, marketing, art, writing, fashion, and product design. However, it also raises concerns about potential misuse, such as in creating deepfakes or contributing to job losses.

What is not Generative AI?

Gen AI works by analyzing massive amounts of existing data to learn the patterns and relationships within it. This data could be text, code, images, or anything else that can be digitized. Once the AI has learned these patterns, it can use them to generate new content that is similar to the data it was trained on, but also original.
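To make "learn the patterns, then generate something new" concrete, here is a deliberately tiny sketch. It is a word-level bigram (Markov chain) model, not a modern neural network: it records which words follow which in a toy corpus, then samples new sentences one word at a time from those statistics. The corpus and the function names are illustrative assumptions.

```python
import random
from collections import defaultdict

def train_bigram_model(corpus: list[str]) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    model: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            model[current].append(nxt)
    return dict(model)

def generate(model: dict[str, list[str]], rng: random.Random, max_words: int = 20) -> str:
    """Sample a new sentence one word at a time from the learned word-to-word patterns."""
    word, output = "<s>", []
    for _ in range(max_words):
        # Duplicates in the follower list make frequent continuations more likely.
        word = rng.choice(model[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

# Hypothetical toy training data.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the dog chased the cat",
]
model = train_bigram_model(corpus)
rng = random.Random(0)
for _ in range(3):
    print(generate(model, rng))
```

Because the model recombines fragments it has seen, it can produce sentences such as "the dog sat on the mat" that never appear in the corpus. Real generative models learn far richer patterns with neural networks, but the basic learn-then-sample loop is similar.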

Here are some examples of things that are not gen AI:

- Search engines: These tools find existing information based on your search query; they don't create new content.

- Chatbots that answer questions with pre-programmed responses: While they can hold a conversation, they can only return responses that were written for them in advance.

- Rule-based systems: These follow a fixed set of rules to make decisions; they can't adapt or generate new responses.

2. Evolution of Generative AI

The evolution of Generative AI (GenAI) has been a journey of innovation and discovery, marked by several key milestones.

- 1950s: The concept of AI emerged with pioneers like Alan Turing and John McCarthy, who laid the groundwork for machines mimicking human intelligence.

- 1980s-1990s: Early generative models like Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs) became widely used, for example in speech recognition, to model and generate sequences from statistical patterns.

- 2000s-2013: Advances in machine learning popularized more sophisticated models like Restricted Boltzmann Machines (RBMs), and Variational Autoencoders (VAEs) were introduced in 2013.

- 2014: The introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow and colleagues revolutionized image generation with two neural networks competing to improve data quality.

- Late 2010s-2020s: The rise of deep learning and neural networks, particularly transformers (introduced in 2017) and large language models, propelled GenAI forward, enabling the creation of diverse and complex content.

Throughout its evolution, GenAI has expanded from simple pattern generation to creating intricate and creative content, significantly impacting many industries and sparking ethical discussions. Today, generative AI continues to evolve with new techniques and cloud-based tools.

Recent breakthroughs in Generative AI

Recent breakthroughs in Generative AI are making waves across different areas:

- More Realistic Outputs: Models like Stable Diffusion, an open-source text-to-image diffusion model, generate highly realistic images from text descriptions, pushing the boundaries of what AI can create visually.

- Multimodal AI: New models are combining different data types like text, images, and audio. This allows for tasks like generating videos with corresponding soundtracks or creating music based on written descriptions.

- Emotionally Aware AI: Advances are enabling virtual assistants to recognize emotional cues in text or speech and respond accordingly, improving customer service interactions and creating more natural human-computer interfaces.

- Improved Prompt Engineering: The way we “talk” to generative AI models, through prompts and instructions, is becoming more crucial. Research is focusing on crafting better prompts to achieve more accurate and creative outputs.

- Video Synthesis: Generating realistic and dynamic video sequences is becoming a reality. This has applications in filmmaking, animation, and even creating training simulations.

3. How Gen AI differs from traditional machine learning models

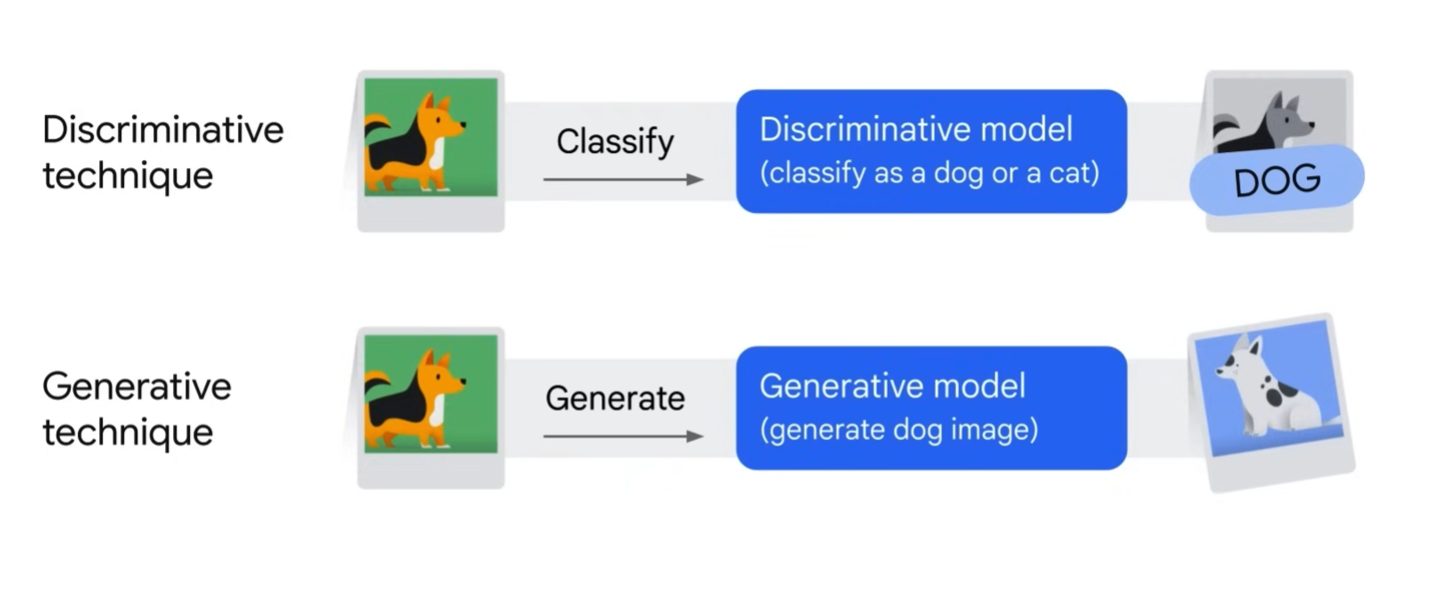

The key difference between Generative AI (GenAI) and traditional machine learning (ML) lies in their goals:

Traditional Machine Learning: Analyzes existing data to make predictions or classifications. It learns patterns and relationships within data to perform tasks like spam filtering, image recognition, or stock price prediction.

Generative AI: Focuses on creating entirely new data. It learns the underlying structure of data and uses that knowledge to generate new, original content that resembles the training data. This could be realistic images, creative text formats, or even novel musical pieces.
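A minimal sketch of this difference on made-up one-dimensional data. The "traditional ML" side learns a simple decision rule (a threshold halfway between the two class means) that can only label inputs; the "generative" side learns a Gaussian distribution for each class and can sample brand-new values from it. Both are deliberately simplistic stand-ins, and the data and names are hypothetical.

```python
import random
import statistics

# Hypothetical toy data: one numeric feature per example, grouped by class label.
data = {
    "A": [1.0, 1.2, 0.8, 1.1, 0.9],
    "B": [3.0, 3.2, 2.8, 3.1, 2.9],
}

# Traditional ML: learn a decision rule that maps inputs to labels.
mean_a = statistics.mean(data["A"])
mean_b = statistics.mean(data["B"])
threshold = (mean_a + mean_b) / 2

def predict(x: float) -> str:
    """Classify an input; this model cannot produce new inputs."""
    return "A" if x < threshold else "B"

# Generative: learn each class's data distribution (here, a Gaussian)...
params = {label: (statistics.mean(xs), statistics.stdev(xs)) for label, xs in data.items()}

def sample(label: str, n: int, rng: random.Random) -> list[float]:
    """...then draw new data points that resemble the training data."""
    mu, sigma = params[label]
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(42)
print(predict(1.05))        # a prediction about an existing input
print(sample("B", 3, rng))  # three new, synthetic class-B values
```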

Here’s a summary table comparing Generative AI with traditional machine learning:

| Aspect | Generative AI | Traditional Machine Learning |
|---|---|---|
| Purpose | Generate new data similar to the training set. | Make predictions or classifications based on existing data. |
| Data Handling | Learns the distribution of data to create new content. | Analyzes patterns in data, often requiring labeled data. |
| Model Types | Uses models like GANs, VAEs and transformers for data creation. | Uses models like decision trees and SVMs for analysis. |
| Learning Approach | Mostly unsupervised, self-supervised or semi-supervised learning. | Often supervised learning with labeled datasets. |
| Complexity | Typically more complex and computationally intensive. | Often less complex, with varying computational needs. |

4. Differences between Artificial Intelligence, Machine Learning, Deep Learning and Generative AI (Gen-AI)

Here are the key differences between them from a financial use-case perspective.

Artificial Intelligence (AI):

This is the broad goal of creating intelligent machines that can perform tasks typically requiring human intelligence. In finance, this could encompass a wide range of applications, like:

- Algorithmic trading: Using AI algorithms to analyze market data and automatically execute trades.

- Fraud detection: Employing AI to identify suspicious financial activity and prevent fraud.

- Chatbots for customer service: Building chatbots powered by AI to answer customer inquiries and resolve basic issues.

Machine Learning (ML):

This is a subfield of AI that focuses on algorithms that can learn from data without explicit programming. In finance, ML plays a crucial role in:

- Credit risk assessment: Using ML models to analyze a borrower’s financial data and predict their creditworthiness.

- Stock price prediction: Developing ML models that analyze historical market data and attempt to predict future stock prices (important to note, prediction is not guaranteed).

- Customer segmentation: Employing ML to categorize customers based on their financial profiles and tailor marketing strategies accordingly.
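To illustrate the credit risk example above, here is a minimal sketch of supervised ML: a logistic regression model, trained with plain batch gradient descent, that outputs a probability of repayment. The two features, the eight labeled applicants, and the hyperparameters are all hypothetical; real credit models use far more data and features, and careful validation.

```python
import math

# Hypothetical training data: (debt_to_income_ratio, missed_payments_last_year)
# labeled 1 = repaid, 0 = defaulted.
X = [(0.10, 0), (0.20, 0), (0.25, 1), (0.30, 0), (0.60, 3), (0.70, 2), (0.80, 4), (0.90, 5)]
y = [1, 1, 1, 1, 0, 0, 0, 0]

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_regression(X, y, lr=0.1, epochs=5000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this applicant.
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        # Step against the average gradient.
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def repayment_probability(w, b, applicant) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, applicant)) + b)

w, b = train_logistic_regression(X, y)
print(round(repayment_probability(w, b, (0.15, 0)), 2))  # low debt, no missed payments
print(round(repayment_probability(w, b, (0.85, 4)), 2))  # high debt, several missed payments
```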

Deep Learning (DL):

This is a specific type of ML that uses multi-layered (deep) neural networks loosely inspired by the structure of the human brain. Deep learning excels at recognizing patterns in large datasets. In finance, deep learning finds applications in:

- High-frequency trading: Analyzing massive amounts of market data in real-time to identify short-term trading opportunities (often used by hedge funds).

- Sentiment analysis: Analyzing social media or news articles to understand investor sentiment and its potential impact on markets.

- Anomaly detection: Detecting unusual patterns in financial data that might signal fraud or other irregularities.

Generative AI (Gen-AI):

This is a specialized field of ML that focuses on creating entirely new data. In finance, Gen-AI could be used for:

- Generating financial reports: Developing AI models that analyze financial data and automatically generate reports with insights and recommendations.

- Creating synthetic financial data: Generating realistic but simulated financial data for training other AI models or for stress testing financial systems.

- Generating personalized investment recommendations: Building Gen-AI models that analyze an individual’s financial situation and risk tolerance to suggest suitable investment options.
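For the synthetic financial data example, here is a minimal sketch of the "learn a distribution, then sample from it" idea. It fits a Gaussian to the daily log returns of a hypothetical price history and samples new, simulated price paths. This is a simple statistical generative model, not a neural Gen-AI model: it assumes independent, normally distributed returns and ignores effects such as fat tails and volatility clustering that more sophisticated generators try to capture.

```python
import math
import random
import statistics

def log_returns(prices: list[float]) -> list[float]:
    """Daily log returns computed from a price series."""
    return [math.log(p1 / p0) for p0, p1 in zip(prices, prices[1:])]

def synthetic_price_path(prices: list[float], n_days: int, rng: random.Random) -> list[float]:
    """Fit a Gaussian to historical log returns, then sample a new simulated price path."""
    returns = log_returns(prices)
    mu, sigma = statistics.mean(returns), statistics.stdev(returns)
    path = [prices[-1]]  # start from the last observed price
    for _ in range(n_days):
        # Compound one sampled daily log return onto the previous price.
        path.append(path[-1] * math.exp(rng.gauss(mu, sigma)))
    return path

# Hypothetical historical closing prices.
history = [100.0, 101.2, 100.8, 102.5, 103.1, 102.4, 104.0, 105.3, 104.7, 106.2]
rng = random.Random(7)
for _ in range(3):
    print([round(p, 2) for p in synthetic_price_path(history, 5, rng)])
```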

Key Points:

- AI is the overarching concept: ML is a subset of AI, DL is a subset of ML, and modern Gen-AI is largely built on deep learning.

- ML learns from data to improve specific tasks, while Gen-AI focuses on creating entirely new data.

- Deep learning excels at complex pattern recognition within large datasets, making it valuable for tasks like high-frequency trading.

Here is a summary of the differences between AI, ML, DL and Gen-AI:

| Term | Description | Finance Example |
|---|---|---|
| AI | Broad field of intelligent machines | Algorithmic trading, chatbots, fraud detection |
| ML | Learning from data for specific tasks | Credit risk assessment, stock price prediction (not guaranteed), customer segmentation |
| DL | Complex ML using neural networks | High-frequency trading, sentiment analysis, anomaly detection |
| Gen-AI | Creating entirely new content | Generating financial reports, synthetic data, personalized investment recommendations |

5. Commonly used Generative Models:

Several popular generative models excel at creating new data that mimics the original dataset. Here are a few key ones:

Generative Adversarial Networks (GANs):

- Concept: This powerful technique involves two competing neural networks:

  - Generator: Creates new data points, typically starting from random noise, that try to mimic the original dataset.

  - Discriminator: Tries to distinguish between real data points and those generated by the generator.

- Training Process: Through an iterative process, the generator improves its ability to fool the discriminator, while the discriminator becomes better at identifying fake data. This back-and-forth competition leads to the creation of increasingly realistic and diverse data.

- Applications: Image generation, video synthesis, creating realistic soundscapes.
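The competition described above is usually written, following the original GAN formulation by Goodfellow and colleagues (2014), as a two-player minimax game. Here $G(z)$ is the generator's output for random noise $z$, and $D(x)$ is the discriminator's estimated probability that $x$ came from the real data:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ (score real data close to 1 and generated data close to 0), while the generator tries to minimize it by pushing $D(G(z))$ toward 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.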

Variational Autoencoders (VAEs):

- Concept: VAEs focus on learning a compressed representation of the original data (latent space) that captures its essential features.

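- Process: An encoder maps each input to a distribution over the latent space, and a decoder maps latent points back to data space. To generate new data, the model samples points in the latent space and decodes them. This structured latent space allows for control over specific data features during generation.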

- Applications: Generating new images with specific attributes (e.g., generating a cat image with different fur colors), data compression, anomaly detection.
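For readers who want the math behind this encode/decode process: a VAE (as introduced by Kingma and Welling) trains an encoder $q_\phi(z \mid x)$ and a decoder $p_\theta(x \mid z)$ by maximizing the evidence lower bound (ELBO), typically with a standard normal prior $p(z) = \mathcal{N}(0, I)$:

$$
\log p_\theta(x) \ge \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction; the KL term keeps the encoded distributions close to the prior, which is what makes sampling from the prior meaningful. Training uses the reparameterization trick so that gradients can flow through the sampling step:

$$
z = \mu_\phi(x) + \sigma_\phi(x) \odot \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, I)
$$

To generate new data, sample $z \sim \mathcal{N}(0, I)$ and pass it through the decoder.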

Transformer-based Models:

- Concept: Originally designed for machine translation, these models excel at capturing long-range dependencies within data, particularly text data.

- Process: Transformers analyze the relationships between words or elements within the original data and use this understanding to generate new sequences that maintain the same style and coherence.

- Applications: Text generation (e.g., creating realistic dialogue or writing in different creative formats), music generation, code generation.
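The "relationships between words" that transformers analyze are computed by an attention mechanism. Below is a minimal, dependency-free sketch of scaled dot-product attention, softmax(QKᵀ / √d_k)·V, as defined in the original transformer paper ("Attention Is All You Need"). The small Q, K and V matrices are made-up numbers; real models learn the projections that produce them, use many attention heads and layers, apply a causal mask when generating text so each position only attends to earlier ones, and run on optimized tensor libraries.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q: list[list[float]], K: list[list[float]], V: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, on plain nested lists."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # How strongly this query position relates to every key position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output

# Three token positions with 2-dimensional queries, keys and values (made-up numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
for row in attention(Q, K, V):
    print([round(x, 3) for x in row])
```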

Autoregressive Models:

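- Concept: These models generate data sequentially, one element at a time, by predicting the next element based on the previous ones. Many transformer-based language models, such as GPT-style models, work this way, so the two categories overlap.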

- Process: The model analyzes the existing sequence, predicts a probability distribution over the next element, picks the most likely element (or samples one), appends it, and repeats this process to build a new sequence.

- Applications: Text generation (e.g., generating realistic news articles or writing in different creative formats), code generation.
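Formally, an autoregressive model factorizes the probability of a whole sequence $x_1, \dots, x_T$ into a product of next-element predictions, using the chain rule of probability:

$$
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1})
$$

Generation follows the same factorization: at each step $t$ the model computes $p(x_t \mid x_1, \dots, x_{t-1})$, picks or samples $x_t$, appends it, and repeats until it emits an end token or reaches a length limit. Always choosing the single most likely element is called greedy decoding; sampling from the distribution instead produces more varied output.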

Choosing the right model depends on the specific type of data you want to generate and the desired level of control over the generation process. These are just a few examples, and the field of Generative AI is constantly evolving with new and innovative models emerging.

6. Generative AI Hallucinations

Generative AI hallucinations, often simply called AI hallucinations, are incorrect or misleading outputs that a generative AI model presents as if they were accurate. Here are some examples:

For example, suppose you ask an AI to write a news article about a new scientific discovery, but there isn't enough data on the topic yet. The AI might fabricate details to fill in the gaps, making the story sound plausible but factually wrong. Another example is an image model asked for a realistic photo of a cat that instead generates a cat riding a bicycle. The result might seem creative, but cats don't ride bicycles, so the image neither matches the request nor reflects reality.

Here’s why they occur:

- Limited Knowledge: Generative AI learns from data, and if that data has gaps or isn’t very comprehensive, the AI might make up information to fill those holes.

- Misinterpretations: The AI might analyze the data patterns incorrectly, leading to nonsensical or illogical outputs.

- Biased Data: If the training data is biased, the AI will reflect that bias and generate outputs that are skewed in a certain direction.

AI hallucinations can be a problem because they can be very convincing. The AI can produce fluent and grammatically correct text, or realistic-looking images, that contain false information. This can be misleading for users who trust the AI’s output to be accurate.

Here are some things to keep in mind to avoid being fooled by AI hallucinations:

- Be aware that generative AI models are not perfect and can make mistakes.

- Double-check any information you get from an AI with a trusted source.

- Look for signs that the output might be inaccurate, like illogical statements or factual inconsistencies.

As generative AI continues to develop, researchers are working on ways to reduce hallucinations. This includes using more comprehensive training data and developing methods for the AI to identify when it’s making unreliable predictions.
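One simple signal for spotting unreliable predictions is how spread out the model's own predicted distribution is. Below is a minimal sketch, assuming you can read the probabilities a model assigns to candidate next tokens; it computes the Shannon entropy of that distribution and flags it when the entropy exceeds a threshold. The threshold and the example distributions are arbitrary assumptions, and `flag_uncertain` is a hypothetical heuristic, not a standard API. Low entropy does not guarantee correctness, because a model can be confidently wrong.

```python
import math

def entropy(probs: list[float]) -> float:
    """Shannon entropy (in bits) of a probability distribution over possible next tokens."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def flag_uncertain(probs: list[float], threshold_bits: float = 1.5) -> bool:
    """Hypothetical heuristic: flag a prediction as unreliable when the model's
    next-token distribution is spread out (high entropy) rather than peaked."""
    return entropy(probs) > threshold_bits

confident = [0.94, 0.03, 0.02, 0.01]  # one continuation clearly dominates
unsure = [0.25, 0.25, 0.25, 0.25]     # the model has no clear preference
print(round(entropy(confident), 3), flag_uncertain(confident))
print(entropy(unsure), flag_uncertain(unsure))
```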

7. Generative AI Drawbacks and Limitations:

Generative AI, while powerful, comes with its own set of drawbacks and limitations. Here are some of the key challenges:

- Misuse and Fraud: Generative AI can be misused for deceptive purposes, like creating fake content.

- Creativity Impact: It might reduce the need for human creativity in fields like art and writing.

- Job Displacement: Automation by generative AI could lead to job loss in certain industries.

- Bias and Discrimination: Biased training data can perpetuate stereotypes.

- Data Quality Dependency: High-quality data is crucial for accurate generative AI.

- Ethical Concerns: It may unintentionally produce unethical content.

- Learning Impact: Overreliance on AI could hinder human skill development.

- Access Divide: Not everyone has equal access to generative AI tools.

Remember, while generative AI is exciting, addressing these challenges is essential for responsible use!